Bringing Sketching Tools to Keychain Computers with an Acceleration-Based Interface

Golan Levin and Paul Yarin

MIT Media Laboratory

20 Ames Street E15-447

Cambridge, MA 02139

{golan, yarin}@media.mit.edu

ABSTRACT

We report the use of an embedded accelerometer as a gestural interface for an extremely small ("keychain") computer. This tilt- and shake-sensitive interface captures the expressive nuances of continuously varying spatio-temporal input, making possible a set of applications heretofore difficult or impossible to implement in such a small device. We provide examples of such applications, including a paint program and some simple animation authoring systems.

Keywords

gestural interfaces, accelerometers, keychain computers

INTRODUCTION

The last few years have witnessed a burgeoning interest in extremely small computers such as the Bandai Tamagotchi [1], the Xerox ParcTab [7], and a wide variety of miniature game machines, calculators and pagers. Sometimes called "keychain computers" because their exceptionally small size (generally two to four centimeters) permits them to fit comfortably on a ring of keys, these miniature computers are subject to severe constraints on the kinds of interface technologies available for acquiring user input.

Figure 1. Some keychain computers. Note the exclusive use of buttons.

The interfaces conventionally afforded by desktop and palmtop computers, such as the mouse, pen, touchscreen, joystick or trackball, are impractical at the extremely small scale of the keychain computer. Pens or fingertips on touchscreens occlude miniature displays, for example, while joysticks and trackball components may consume an impractical amount of space on a miniature device. For these and other reasons, designers of keychain computers have almost exclusively adopted the push-button as their sole means of capturing user input.

Buttons, unfortunately, are an inadequate means of capturing expressive gestural input. We believe that developers of very small computers, restricted to using an interaction technology which essentially predates the graphical user interface, have focused their efforts on application areas (such as retrieving phone numbers) which do not require expressive input. In so doing, we believe they have overlooked an area that is highly interesting, but predicated on gestural input: sketching and doodling.

We were motivated to design a physical interface that could support the capture of gestural input on as small a device as possible. We seized on the idea that the spatial movement of the device itself could be used as a means for creative expression. In this short paper, we present two design iterations of a miniature computer with an embedded accelerometer-based interface that meets our criteria.

BACKGROUND

Several researchers have sought alternatives to conventional interfaces for Personal Digital Assistants (PDAs) and other portable displays by affixing accelerometers or other tilt sensors to them; important examples include [2, 3, 4, 5, 6]. These studies have principally focused on the utility of tilt-based interfaces for scrolling through menus and lists [2, 3, 5], browsing pages of text [5, 6], and navigating maps [5]. The use of tilt as an expressive input for drawing and sketching tasks, by contrast, has been largely overlooked.

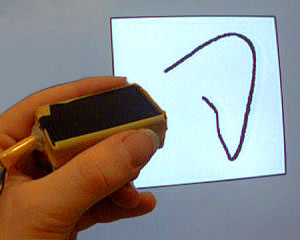

Figure 2. We discuss the use of tilting and shaking as a means of drawing.

Our prototypes capture continuous values for both tilt and shake in order to support creative sketching and doodling. We use tilt data as a means of suggesting the illusion of a gravity-aware system, for which there is precedent in [4, 6]. Unlike [2, 3, 4, 5, 6], however, we have found a use for shaking as a means of interacting with graphical objects. Although high-frequency acceleration effects are generally undesirable in navigation tasks such as scrolling, they can be important components of an expressive gesture. We incorporate the derivatives of acceleration (jerk and jounce) as impulse forces in our graphics simulations, using them to influence (for example) the animation of graphical elements or the width of a line.
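The following Python fragment is a minimal sketch, not the Shakepad software, of how jerk and jounce might be estimated from uniformly sampled acceleration on one axis using backward finite differences, and how jerk could then modulate line width. The sample interval `dt`, the `gain`, and the width limits in the hypothetical `line_width` function are illustrative values, not parameters reported for the prototypes.

```python
from typing import List, Tuple


def finite_difference(samples: List[float], dt: float) -> List[float]:
    """Backward-difference derivative of a uniformly sampled signal.

    The result is one element shorter than the input.
    """
    return [(b - a) / dt for a, b in zip(samples, samples[1:])]


def jerk_and_jounce(accel: List[float], dt: float) -> Tuple[List[float], List[float]]:
    """Estimate jerk (da/dt) and jounce (d^2a/dt^2) from acceleration samples."""
    jerk = finite_difference(accel, dt)
    jounce = finite_difference(jerk, dt)
    return jerk, jounce


def line_width(jerk: float, base: float = 1.0, gain: float = 0.05,
               max_width: float = 8.0) -> float:
    """Hypothetical mapping: more vigorous shakes draw thicker strokes, up to a cap."""
    return min(max_width, base + gain * abs(jerk))


# Example: a step in acceleration produces a jerk spike and a +/- jounce pair.
jerk, jounce = jerk_and_jounce([0.0, 0.0, 1.0, 1.0], dt=0.1)
widths = [line_width(j) for j in jerk]
```

Because each differencing step amplifies sensor noise, a practical version would probably low-pass filter the raw samples before differencing; that filtering is omitted here.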

THE SHAKEPAD

We have built two miniature prototypes, called Shakepad-I and Shakepad-II, which are intended for authoring drawings and simple animations. Both prototypes are small devices that a user can hold between the thumb and forefinger.

Users operate a Shakepad by gently or vigorously shaking the device in order to create a variety of animated graphic displays. In our most basic application, the user can draw a sinuous static line; in other applications, the user can influence the animation of a system of simulated spring-loaded particles, direct the rhythmic movement of a sequence of responsive rectangles, or influence a continually-changing trail of glowing elements.
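A spring-loaded particle of the kind described above can be sketched as a damped spring integrated with semi-implicit Euler. This is an assumed model rather than the authors' simulation: the stiffness `k`, the `damping` coefficient, unit particle mass, and the `Particle` and `step` names are all hypothetical. Because an accelerometer reports gravity and motion together, applying the negated sensor reading to the particle gives it both a tilt-driven "gravity" and a shake-driven kick from a single input.

```python
from dataclasses import dataclass


@dataclass
class Particle:
    x: float = 0.0   # displacement from the spring's rest position
    y: float = 0.0
    vx: float = 0.0  # velocity
    vy: float = 0.0


def step(p: Particle, ax: float, ay: float, dt: float,
         k: float = 40.0, damping: float = 2.0) -> None:
    """Advance one particle by dt using semi-implicit Euler (unit mass assumed).

    (ax, ay) is the accelerometer reading. In the device's own frame the
    particle feels the negated reading, so a steady tilt makes it lean and
    a sudden shake throws it opposite to the motion.
    """
    fx = -k * p.x - damping * p.vx - ax
    fy = -k * p.y - damping * p.vy - ay
    # Update velocity first, then position with the new velocity.
    p.vx += fx * dt
    p.vy += fy * dt
    p.x += p.vx * dt
    p.y += p.vy * dt
```

Under a constant reading the particle settles at a displacement of `-a/k`, which is how a steady tilt shows up as a lean; a shake perturbs it, and the damped spring pulls it back toward rest.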

Figure 3. The Shakepad-I prototype in front of its computer display. Tilting and shaking the device controls the path and thickness of the line.

The Shakepad-I is tethered to a Macintosh computer. Custom software written in Macromedia Director™ processes serial data from the sensor inputs and generates the graphic display on the computer’s monitor. This prototype served as a ‘proof-of-concept’ demonstration that shaking could be used as an expressive input for sketching and doodling; it was also useful for rapidly developing the display mathematics. Because it had no integrated display, however, it left open the question of how much coincident input and output would add.

Figure 4. Actual sketches made with the Shakepad-I.

The wholly untethered Shakepad-II, by contrast, performs all computations locally on a PIC microcontroller. In addition to acquiring sensor data, this processor also drives the pad’s 8×8 LED matrix display. Although this low-resolution display has its own appeal, we expect to increase its resolution and reduce its size in future versions.
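The paper does not describe the Shakepad-II firmware. As an illustration only, an 8×8 frame can be held as eight bytes, one per row, with one bit per LED; the sketch below maps a normalized pen position onto that grid. It is written in Python for readability (PIC firmware would more likely be C or assembly), and the row-per-byte layout and the `clear_frame`, `plot`, and `render` names are hypothetical.

```python
from typing import List


def clear_frame() -> List[int]:
    """An 8x8 frame as eight row bytes, all LEDs off."""
    return [0] * 8


def plot(frame: List[int], u: float, v: float) -> None:
    """Light the LED nearest normalized position (u, v), each in [0, 1].

    Positions are clamped to the grid so u or v of exactly 1.0 maps to
    the last column or row.
    """
    col = min(7, max(0, int(u * 8)))
    row = min(7, max(0, int(v * 8)))
    frame[row] |= 1 << col


def render(frame: List[int]) -> str:
    """Text preview of the frame: '#' for a lit LED, '.' for an unlit one."""
    return "\n".join(
        "".join("#" if (row >> c) & 1 else "." for c in range(8))
        for row in frame
    )
```

A row-per-byte layout is convenient if the matrix is multiplexed one row at a time, since each row's byte can be written directly to the column drivers.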

Figure 5. The Shakepad-II prototype outside (left) and inside (right) its enclosure.

Both Shakepad prototypes receive their gestural input from acceleration sensors (accelerometers) mounted inside or under their small enclosures. Such sensors are readily available as self-contained ICs and are commonly used in applications such as automobile airbag deployment systems; the sensors we used are sensitive to accelerations in the range ±5 g, which corresponds well to the range of accelerations the human body can produce. The Shakepad-I uses two accelerometers mounted at right angles to one another in order to sense acceleration along both the x and y axes; the Shakepad-II uses a single-chip two-axis accelerometer for the same purpose.
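Converting raw sensor output to acceleration and tilt might look like the following sketch. The ±5 g range comes from the text; the 8-bit converter and mid-scale zero-g offset are hypothetical, and a real sensor would need per-axis calibration of offset and scale. Tilt is recovered from the gravity component on each axis with an arcsine, which is only meaningful while the device is held still rather than shaken.

```python
import math

FULL_SCALE_G = 5.0       # sensor range from the text: +/-5 g
ADC_MAX = 255            # hypothetical 8-bit analog-to-digital converter
ADC_ZERO = ADC_MAX / 2   # hypothetical zero-g output at mid-scale


def counts_to_g(counts: int) -> float:
    """Map a raw converter reading to acceleration in g, assuming a linear sensor."""
    return (counts - ADC_ZERO) / ADC_ZERO * FULL_SCALE_G


def tilt_degrees(a_g: float) -> float:
    """Tilt of one axis from horizontal, from the static gravity component.

    The reading is clamped to [-1, 1] g so that shake-induced values
    beyond 1 g do not make asin fail.
    """
    return math.degrees(math.asin(max(-1.0, min(1.0, a_g))))


# Example: two perpendicular axes give a tilt angle for x and for y.
x_tilt = tilt_degrees(counts_to_g(140))
y_tilt = tilt_degrees(counts_to_g(127))
```

Under these assumptions one converter count corresponds to about 0.04 g (10 g spread over 255 counts), which is coarse for small tilts but ample for vigorous shakes.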

CONCLUSIONS

If keychain computers are to offer the functionality of paint programs or other expressive instruments, they must have feasible interfaces for the capture of expressive spatio-temporal input. For this application, a continuous-control interface is not merely a sufficient replacement for buttons or pens, but a necessary one. The accelerometers embedded in the Shakepad prototypes are a promising technology for such interfaces, as they are compact, do not occlude a small display, allow for perceptually coincident input and output, and most importantly, permit the input of continuous kinetic gestures in an intuitive and natural way.

ACKNOWLEDGMENTS

We thank Hiroshi Ishii, John Maeda, Clay Harmony, Rich Fletcher, Rob Poor, Joe Paradiso, Andy Dahley, Romy Achituv, Scott Snibbe, Kelly Heaton, and Joey Berzowska.

REFERENCES

1. Bandai Corporation. Tamagotchi keychain computer, 1996. <http://www.bandai.com/tamagotchiarea/>

2. Fitzmaurice, G.W., Zhai, S., Chignell, M. Virtual Reality for Palmtop Computers. ACM Transactions on Information Systems, Vol. 11, No. 3, pp. 197-218, July 1993.

3. Harrison, B.L., Fishkin, K.P., Gujar, A., Mochon, C., Want, R. Squeeze Me, Hold Me, Tilt Me! An Exploration of Manipulative User Interfaces. Proceedings of CHI ’98 (Los Angeles CA, April 1998), ACM Press, pp. 17-24.

4. Inami, M., Kawakami, N., Yanagida, Y. Physically Aware Devices with Graphical Displays. Program Tachi Lab, University of Tokyo, SIGGRAPH ’98 Enhanced Realities.

5. Rekimoto, J. Tilting Operations for Small Screen Interfaces. Proceedings of UIST ’96, pp. 167-168.

6. Small, D., Ishii, H. Design of Spatially Aware Graspable Displays. Extended Abstracts of CHI ’97, pp. 367-368.

7. Want, R., Schilit, B.N., Adams, N.I., Gold, R., Petersen, K., Goldberg, D., Ellis, J.R., Weiser, M. An Overview of the ParcTab Ubiquitous Computing Experiment. IEEE Personal Communications, December 1995, pp. 28-43.