Imagine a room filled with electronic devices—appliances, thermostats, computers, portable music players, PDAs—all of which can communicate wirelessly. This may not even require an act of imagination. Now imagine yourself with a handheld device in the middle of all this wanting to use it to communicate with just one of these devices. How will you do it? Will you select its name from a menu on a screen? Will you tap on an icon? Either way your attention will be focused on the device in your hand, not the true target of your intention. Your selection will be a selection by metaphor. Why not simply point at the thing you want to communicate with? Pointing is a natural extension of the human capacity to focus attention. It establishes a spatial axis relative to an agent, unambiguously identifying anything in line-of-sight without a need to name it. This brings our interactions with electronic devices closer to our interactions with physical objects, which we name only when we have to. Pointable Computing proposes that a visible laser be coupled to a handheld device and cheap sensors attached to potential objects of communication. Information may be conveyed along a laser beam, establishing a channel of communication along a perceivable axis of pointing. Ultimately the goal of this project is, through a subtle change in the focus of interaction, to foster decentralized computation in which individual computational objects scattered in the environment may be controlled directly rather than through a single hub. This kind of electronic autonomy curtails the ability of faceless authorities or proprietary standards to shape the future of computation.

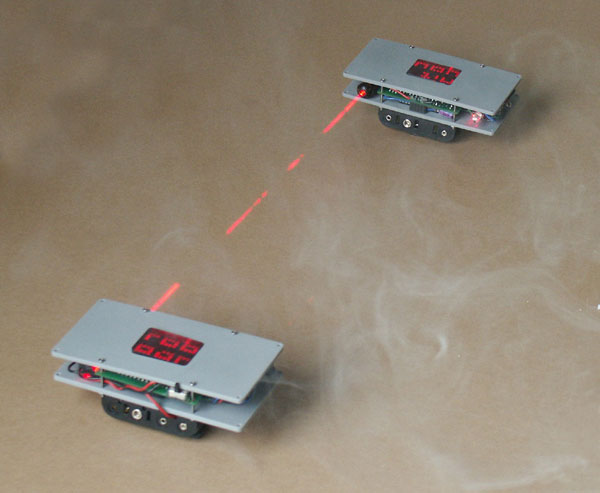

In a working prototype, Word Toss, two handheld devices are able to send short words back and forth on visible laser beams.
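
The text above does not say how Word Toss encodes a word onto the beam. Purely as an illustration, here is a minimal sketch of one way a short word could be framed as a sequence of laser on/off states. The start marker, length byte, and checksum are assumptions of this sketch, not details of the prototype, and the names `encode_word` and `decode_bits` are hypothetical.

```python
from typing import List, Optional

START = 0x7E  # hypothetical start-of-frame marker, not taken from the prototype


def encode_word(word: str) -> List[int]:
    """Turn a short ASCII word into laser on/off states (1 = beam on)."""
    payload = word.encode("ascii")
    if len(payload) > 255:
        raise ValueError("word too long for a one-byte length field")
    checksum = sum(payload) % 256
    frame = bytes([START, len(payload)]) + payload + bytes([checksum])
    # Emit each byte most-significant bit first.
    return [(byte >> shift) & 1 for byte in frame for shift in range(7, -1, -1)]


def decode_bits(bits: List[int]) -> Optional[str]:
    """Recover the word, or return None if the frame is malformed or corrupted."""
    if len(bits) % 8 != 0:
        return None
    data = bytes(
        int("".join(str(b) for b in bits[i:i + 8]), 2)
        for i in range(0, len(bits), 8)
    )
    if len(data) < 3 or data[0] != START:
        return None
    length = data[1]
    if len(data) != length + 3:
        return None
    payload, checksum = data[2:2 + length], data[-1]
    if sum(payload) % 256 != checksum:
        return None
    try:
        return payload.decode("ascii")
    except UnicodeDecodeError:
        return None


if __name__ == "__main__":
    bits = encode_word("toss")
    print(decode_bits(bits))
```

A frame like this lets the receiver reject a word that was clipped because the beam drifted off the sensor mid-transmission, which seems a likely failure for a hand-aimed link.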

Motivation

When I started using computers, they did not have mice. I felt close to the machine because I learned to understand all the layers between the hardware and me. When GUI-based operating systems hit the desktop, they completely unnerved me. Suddenly the computer that made sense to me was couched behind a semi-spatial metaphor that elucidated neither its workings nor the nature of space. I have, of course, come to accept it. I have taken the time to try to understand the new layers of operation that have been interposed between me and the machine. But fundamentally, the spatializing of computational objects in screen space remains an affront because it engages the user as two fingers and a wrist. Anyone who has ever spent ten perfectly good seconds looking for a cursor that she purportedly controls with her hand has felt the difference between screen space and real space.

My work takes computation away from the screen and into the realm of full physical involvement. It is by no means the first effort to do so. The difficulty and expense of tracking points in space or the vagaries of computer vision have hampered many previous attempts. Many of the successful prototypes require very strict conditions of operation or a special setup not widely available to the public. By contrast, Pointable Computing requires only a very cheap and intuitive layer of technology to be applied to a handheld device and an object in the world, and derives from those a robust and useful spatial relationship without calibration.

There is an irony in the use of the words “active,” “interactive,” and “reactive” to describe computational objects, both physical and virtual. It is a common practice, as though nothing had those qualities until the computer waltzed in and started endowing ordinary objects with buttons and microphones. The truth is that non-computational objects are far more active, interactive, and reactive than any working computational version of the same. The reason is that in order to consider an object computationally, we must derive data from it, and that means outfitting it with sensors in some way. As soon as we do that, we chop away all of the interactions we have with that object that are not meaningful to the specific sensor we have chosen. No matter how many sensors we add, we are taking a huge variety of interactive modalities and reducing them to several. How could a simulation of a cup ever be as interactive as a cup?

Some argue that adding sensors to a physical object does not constrain its existing interactivity, but augments it electronically. I believe that is true as long as the object remains primarily itself with respect to the user and does not undergo some metaphoric transformation into a virtual representation of itself (visual or otherwise) or into a semantic placeholder. That is difficult to achieve, and cannot be done as long as a user must consult a secondary source to determine the quality of his interaction. To check a screen or even to listen to a tone to determine the pressure with which I am squeezing an object supersedes my own senses and reduces any squeezable object to a pressure sensor. In order for a physical object to be augmented rather than flattened by computation, the computation must occur (or appear to occur) inside the object and the consequences of the computation must be registered by the object. This is a strong argument for ubiquitous computation, which challenges the supremacy of the desktop computer, placing localized computation in objects distributed throughout the environment.

Pointable Computing takes as its starting point an emerging reality in which everyday electronic devices communicate wirelessly. These devices already have identities tied to their functions, be they headphones, storage devices, or building controls. They are not crying out for an additional layer of interface. How can we address the new capacity of things to talk to each other without further mediating our relationships with them? We need the remote equivalent of touch, an interaction focused on its object and containing its own confirmation. Pointable Computing offers that by way of a visible marker, a bright spot of light. You do not need to consult a screen to determine if you are properly aligned. It is apparent. The receiver may also indicate that it has acquired the beam, but that indication will always be secondary to the visual confirmation that the object is illuminated.

As Norbert Wiener pointed out, any system containing a human being is a feedback system. As a user, a person automatically adjusts his behavior based on the overall performance of the system. What makes Pointable Computing a robust communication system is that the feedback loop containing the human being is direct and familiar. The human eye has an area of acuity of 1–2°, implying that narrow, beamlike focus is the norm, not the exception, for human perception. The rest of the visual field is sampled by eye movements and then largely constructed in the brain. Tight visual focus is the way we solve the problem of reference without naming in a spatial environment. The feedback loop that enables the act of looking entails our observing and correcting our proprioceptively sensed body attitude to minimize error of focus. It happens so quickly and effectively that we do not even notice it. The same visual feedback loop can be applied to a point of focus controlled by the hands. It is not quite as immediate as the eyes, but it is close. And, as it turns out, it doesn’t suffer from the kinds of involuntary movements that plague eye-tracking systems.

I have developed a few use cases to demonstrate the potential of Pointable Computing:

Universal remote

The most obvious use of Pointable Computing would be to make a universal remote. Pointing the device at any enabled object would turn the handheld into a remote control for that object. On the face of things, this seems to be a rather mundane application, and one that seems to run counter to the program of endowing objects with individuality and escape from metaphor. But consider a simple control consisting of a single pressure sensor. This might be useful as a volume control or light dimmer, for instance. Since there is nothing on the control to look at, the interaction with the controlled device can be directed entirely at it while a thumb controls the pressure.

Further, this kind of control can bring autonomy to a previously overlooked device. For example, speakers are the source of sound. It would make sense that to control their volume you would manipulate them directly. This isn’t, however, the case. Instead we have to reach to a separate box covered with controls and turn a knob. We know this drill because we have learned it, but it makes sense only if understood as a case for efficiency: all the controls are centrally located to save you the footwork of walking to your speakers and to save money in manufacture. If the speakers were outfitted with pointable sensors, they would be controllable from anywhere they were visible as fast as you could point at them, and they would enjoy finally being addressed as the agents of soundmaking instead of the slaves of a master console. This kind of distributed autonomy and freedom from central control is exactly the condition that Pointable Computing facilitates.

Active Tagging

Imagine yourself walking down an aisle of products. You see one you would like more information about or two you would like to compare. You point your handheld device at them and they transmit information about themselves back to you. Why is this different from giving each product a passive tag and letting an active reader look up information in a database? Again the answer is about autonomy and decentralization. If the information is being actively sent by the object scanned, it does not need to be registered with any central authority. It means that no powerful agent can control the repository of product information, and anyone can create an active tag for anything without registering some unique identifier. Note also that this is a scenario in which a non-directed wireless technology such as Bluetooth would likely be useful in conjunction with a Pointable. The two technologies complement each other beautifully.
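
To make the contrast with a passive tag concrete, here is a small sketch in which each tag transmits its own description and the handheld compares two of them without any lookup. The JSON payload, its field names, and the functions `make_tag_payload`, `read_tag`, and `compare` are all assumptions made for illustration; nothing here describes an actual tag format.

```python
import json
from typing import Any, Dict


def make_tag_payload(name: str, facts: Dict[str, Any]) -> str:
    """What a hypothetical active tag might transmit when it is pointed at."""
    return json.dumps({"name": name, "facts": facts}, sort_keys=True)


def read_tag(payload: str) -> Dict[str, Any]:
    """Parse a tag's self-description. No registry or unique ID is consulted."""
    record = json.loads(payload)
    if not isinstance(record, dict) or "name" not in record or "facts" not in record:
        raise ValueError("not a self-describing tag payload")
    return record


def compare(a: Dict[str, Any], b: Dict[str, Any], fact: str) -> Dict[str, Any]:
    """Line up one fact from two pointed-at products, keyed by product name."""
    return {a["name"]: a["facts"].get(fact), b["name"]: b["facts"].get(fact)}


if __name__ == "__main__":
    oats = read_tag(make_tag_payload("Oats", {"price": 3.5, "grams": 500}))
    bran = read_tag(make_tag_payload("Bran", {"price": 4.0, "grams": 450}))
    print(compare(oats, bran, "price"))
```

Because everything the reader needs arrives in the payload, anyone could author such a tag; the trade-off is that the reader has no outside authority against which to check what the tag says about itself.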

Getting and Putting

In a vein similar to the Tangible Media Group’s mediaBlocks project, it would make sense to use Pointable Computing to suck media content from one source and deliver it to another. Here again it is not necessary to display much on the handheld device, and one button may be sufficient. An advantage in media editing that the Pointable has over a block is that there is no need to touch the source. That means that it would be possible to sit in front of a large bank of monitors and control and edit to and from each one without moving. It may even make sense to use a Pointable interface to interact with several ongoing processes displayed on the same screen.

Ad-hoc networking

In this simple application, the Pointable is used to connect or separate wireless devices. If, for instance, you have a set of wireless headphones which can be playing sound from any one of a number of sources, there is no reason you couldn’t simply point at your headphones and then point at the source to which you want to connect them.
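
The point-then-point gesture can be sketched as a two-step state machine on the handheld. This is only an illustration: the class `PointAndLink`, the device names, and especially the rule that pointing twice at the same device separates it are assumptions of the sketch, since the text does not say how separation would be signaled.

```python
from typing import Dict, Optional, Tuple


class PointAndLink:
    """Pairs devices from two successive pointing events (hypothetical design)."""

    def __init__(self) -> None:
        self.pending: Optional[str] = None  # first device pointed at, if any
        self.links: Dict[str, str] = {}     # sink -> source

    def point_at(self, device_id: str) -> Optional[Tuple[str, str]]:
        """Register one pointing event; return (sink, source) when a link forms."""
        if self.pending is None:
            self.pending = device_id
            return None
        first, self.pending = self.pending, None
        if device_id == first:
            # Assumed gesture: pointing twice at one device separates it.
            self.links.pop(device_id, None)
            return None
        self.links[first] = device_id
        return (first, device_id)


if __name__ == "__main__":
    hand = PointAndLink()
    hand.point_at("headphones")
    print(hand.point_at("stereo"))
    print(hand.links)
```

Neither device needs a name that the user can read; the identifiers are exchanged over the beam and the handheld only remembers which one was pointed at first.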

Sun Microsystems likes to say, “The network is the computer.” This is a fairly easy formulation to agree with considering how many of our daily computational interactions are distributed among multiple machines. Any form of electronic communication necessarily involves a network. The shrinking and embedding of computation into everyday objects implies that informal networks are being created in the physical fabric of our homes and offices. If we assume that the network of wireless devices around ourselves is essentially a computer, we must admit that we spend our days physically located inside our computers. Being located inside the machine is a new condition for the human user, and it allows the possibility of directing computation from within. A pointing agent, a kind of internal traffic router, is one potential role for the embedded human being.

Reactive surfaces

Reactive surfaces make use of new materials that have changeable physical properties, such as LCD panels, electrochromic glass, OLEDs, or electroluminescents. They are building surfaces, exterior or interior, covered with these changeable materials coupled to arrays of pointable sensors. It would be possible, for instance, to write a temporary message on a desk or wall or define a transparent aperture in an otherwise shaded window wall. Such an aperture might follow the path of the sun during the day.